Using AI to Generate Book Summaries

TL;DR

- I used LLMs to generate summaries and visuals for every book I read this year, largely replacing a task I usually procrastinate on and batch at year-end.

- The models did a genuinely good job on well-known books, but struggled noticeably with more obscure titles that were likely outside their training data.

- An agent-based approach overcame the shortcomings of single-shot prompts and eliminated hallucinations.

- The visuals were the standout: infographics and comic strips were consistently impressive.

- Overall, this felt less like automation for its own sake and more like a practical, time-saving use of AI — just make sure to review the output!

Every year around this time, I begin working on my blog post summarizing the books I’ve read over the past twelve months. To help with this, I create a summary page for every book I read — for example, here’s one I did earlier this year for Nate Silver’s “On the Edge”. The original idea was to note some key points and takeaways for each so I can review them later and remind myself about any important lessons or key quotes for the book. I have a script that generates a basic outline page for each book, to which I can also add my own notes. In practice, though, I rarely create the page or add notes right after reading. Instead, I end up doing them all in bulk at the end of the year. At that point, each page typically just has the skeleton info or perhaps some perfunctory comments from me.

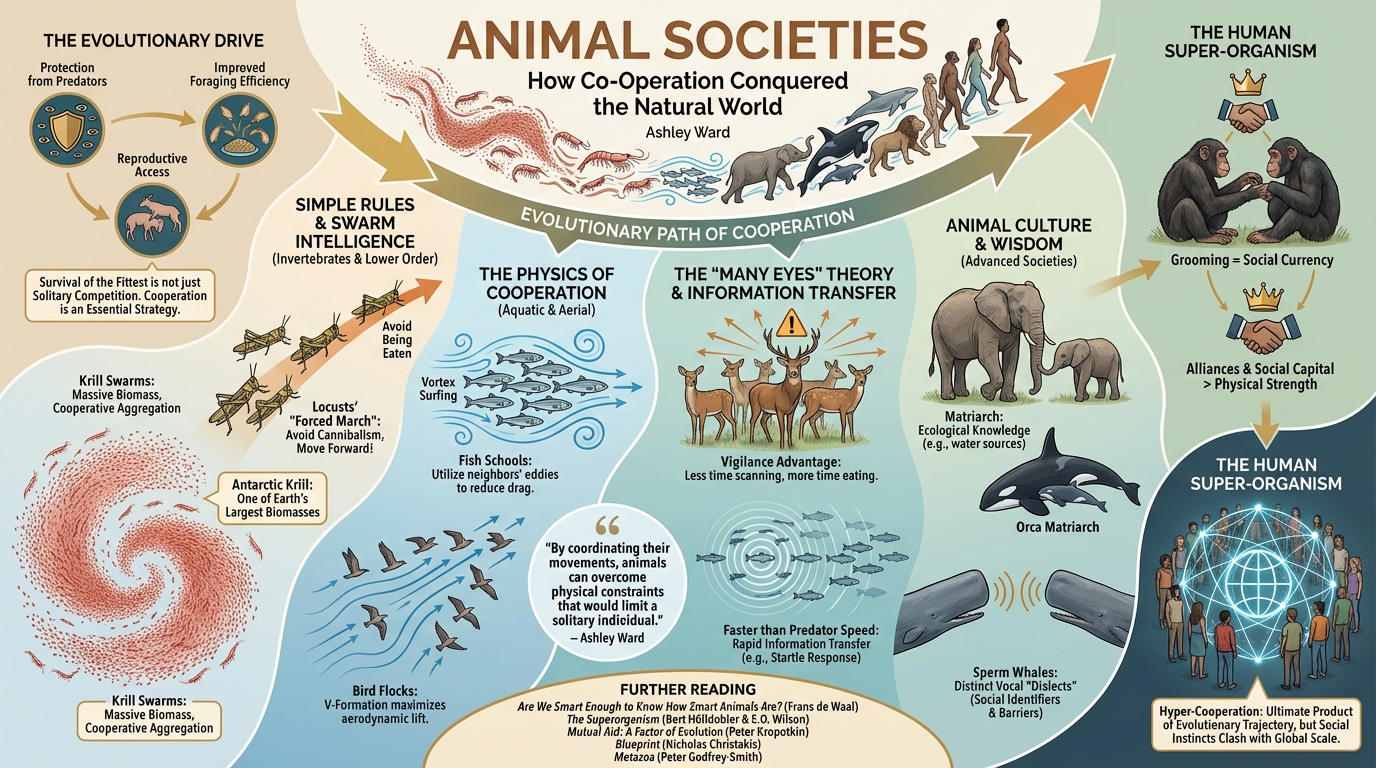

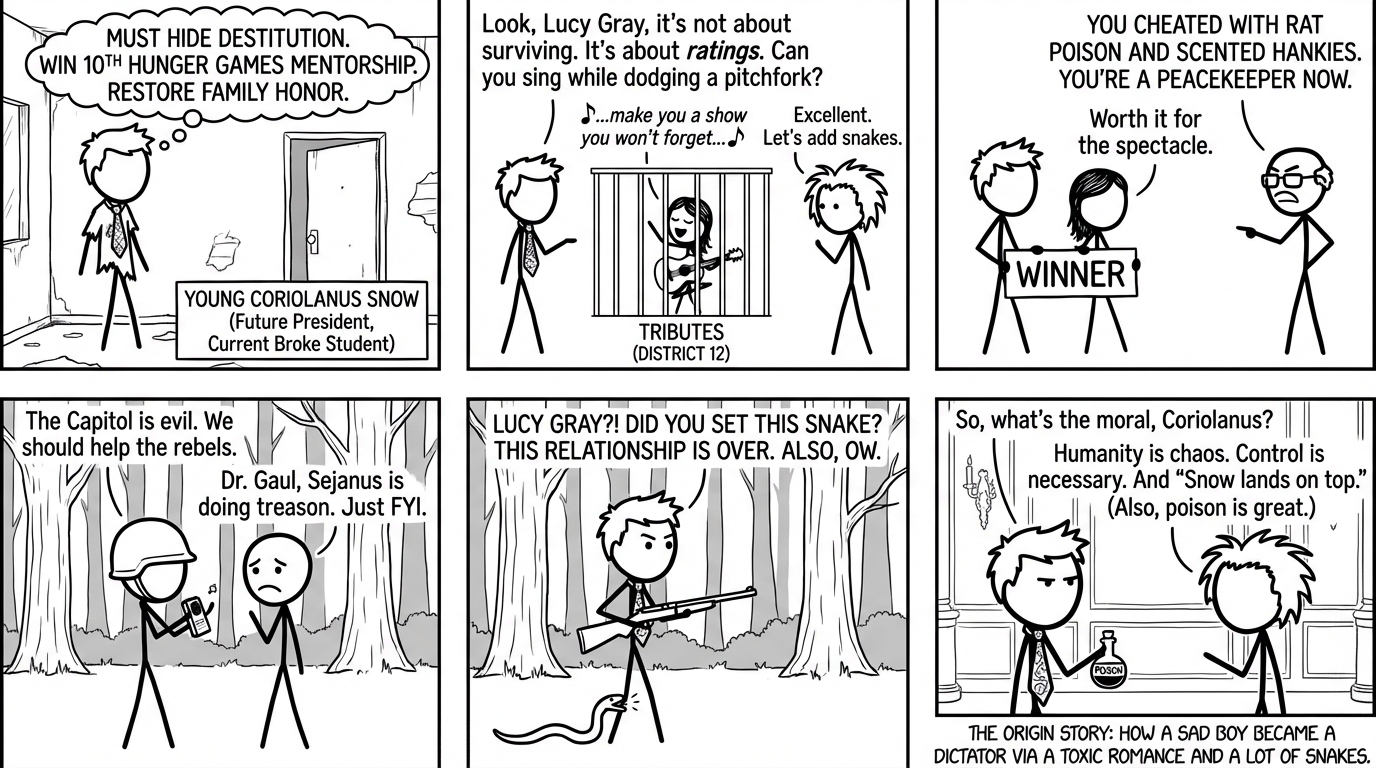

This year I decided to use AI to summarize each book. I was also impressed with what I’d seen from Google’s Nano Banana Pro model, so I used it to generate visuals for each book: infographics for non-fiction and comic strips for fiction. You can see the prompts I used for the summaries here (non-fiction) and here (fiction). In addition, the prompts used for the visuals can be seen here (infographic) and here (comic strip). I decided to use Google’s Gemini 3 Pro (gemini-3-pro-preview) for the summary generation and Nano Banana Pro (gemini-3-pro-image-preview) for the visualizations.

For most books, Google’s LLM produced solid summaries, and the visuals were particularly impressive. When generating comic strips, I asked the model to mimic xkcd’s style, and both the images and tone felt appropriate. The infographics were excellent, with very few spelling mistakes. That said, I would prefer a less dramatic visual style and could likely get even better results with more prompt refinement.

However, for a small number of the fiction books, the LLM came back with a summary that only had a cursory relationship with the actual plot of the book. A good example of this is the summary it produced of “A Reluctant Spy” by David Goodman. The summary it produced - you can read it here - was only vaguely similar to the novel’s plot. I requested the summary multiple times and each time it would get the first name of the main character (Jamie) correct but a different surname and completely made up names for the other main characters. The overall gist of the plot was similar each time it tried but the details were different each time. Another example is this summary it produced of Joseph Finder’s novel “The Oligarch’s Daughter”. This time it claimed the main character was Nick Heller who appears in a number of Finder’s recent books but not in this one.

I assume this was happening because these books weren’t in the model’s training data and thus the model was trying to produce a plausible summary of the plot lines given what it did know - apart from being very wrong it did a good job! To overcome this I decided I would first search the web for information about each book and add that text into the context for the LLM before asking it for a summary. In order to do this, I created a “deep research agent” using LangChain’s DeepAgents framework and the Tavily Web Search API. I adapted the code from LangChain’s Deep Research QuickStart repo - it’s probably somewhat of an overkill for this use case but I wanted to try out the framework so this gave me an excuse. The overall deep-agent prompt can be seen here.

This solved the problem and now it came back with accurate summaries of “A Reluctant Spy” and “The Oligarch’s Daughter” as well as various other books the straight prompt approach had failed on.

Overall though, for books where the straight prompt worked, my first impression is that it provided a richer summary than the deep research agent. Perhaps because it was summarizing across much more data than the handful of web pages being provided as context for the deep research agent. Having said that, there were also differences in the prompts used, so perhaps more tweaking would improve the deep research agent results.

The visual capabilities of these models are game-changers and I think will be quite disruptive in many areas in the months to come as people figure out how to leverage them more in their own creative endeavours.

Potential next steps for me are:

- retrofit the AI generated summary and visuals for all the books I have a page for.

- extracting entity data from the book summaries to create a knowledge graph.

- develop a graph-rag chatbot on top of the knowledge graph.

In previous years, the above steps would have been a pipe dream, but with all the new AI coding assistants now available, maybe I will make some progress - let’s see!